- Load bulk data into Sequel Pro manual

- Load bulk data into Sequel Pro code

- Load bulk data into Sequel Pro download

Load bulk data into Sequel Pro code

The CloudFormation template deploys the following resources:

- A private S3 bucket configured with an S3 event trigger upon file upload.
- A DynamoDB table with on-demand read/write capacity mode.
- A Lambda function with a timeout of 15 minutes, which contains the code to import the CSV data into DynamoDB.
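The import step performed by the Lambda function can be sketched roughly as follows. This is a minimal illustration, not the code shipped in the template: the `TABLE_NAME` environment variable and the `csv_to_items` helper are hypothetical names, the CSV is assumed to be UTF-8 with a header row, and every value is stored as a string.

```python
import csv
import io
import os
import urllib.parse


def csv_to_items(csv_text):
    """Parse CSV text (first row is the header) into one dict per data row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Design choice for this sketch: empty cells are dropped rather than stored.
    return [
        {name: value for name, value in row.items()
         if name is not None and value not in (None, "")}
        for row in reader
    ]


def lambda_handler(event, context):
    # boto3 is imported here so the parsing helper above works without it.
    import boto3

    # The S3 event carries the bucket name and the URL-encoded object key.
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = urllib.parse.unquote_plus(record["object"]["key"])

    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()
    items = csv_to_items(body.decode("utf-8"))

    # TABLE_NAME is a hypothetical environment variable set on the function.
    table = boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])
    # batch_writer groups the puts into batch write requests automatically.
    with table.batch_writer() as batch:
        for item in items:
            batch.put_item(Item=item)
    return {"imported": len(items)}
```

Keeping the parsing in its own function means it can be checked locally without AWS credentials. The header row must include the table's key attributes for the writes to succeed.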

Load bulk data into Sequel Pro download

The AWS Data Pipeline approach requires several prerequisite steps to configure Amazon S3, AWS Data Pipeline, and Amazon EMR to read and write data between DynamoDB and Amazon S3. In addition, it can become costly because you incur additional costs for using these three underlying AWS services.

Bulk CSV ingestion from Amazon S3 into DynamoDB

There is now a more efficient, streamlined solution for bulk ingestion of CSV files into DynamoDB. Follow the instructions to download the CloudFormation template for this solution from the GitHub repo.

This post reviews what solutions exist today for ingesting data into Amazon DynamoDB. It also presents a streamlined solution for bulk ingestion of CSV files into a DynamoDB table from an Amazon S3 bucket and provides an AWS CloudFormation template of the solution for easy deployment into your AWS account.

Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale. Today, hundreds of thousands of AWS customers have chosen to use DynamoDB for mobile, web, gaming, ad tech, IoT, and other applications that need low-latency data access. A popular use case is implementing bulk ingestion of data into DynamoDB. This data is often in CSV format and may already live in Amazon S3. This bulk ingestion is key to expediting migration efforts, alleviating the need to configure ingestion pipeline jobs, reducing the overall cost, and simplifying data ingestion from Amazon S3.

In addition to DynamoDB, this post uses Amazon S3 and AWS Lambda at a 200–300 level to create the solution.

To complete the solution in this post, you need the following:

- An IAM user with access to DynamoDB, Amazon S3, Lambda, and AWS CloudFormation.

Current solutions for data insertion into DynamoDB

There are several options for ingesting data into Amazon DynamoDB. The following AWS services offer solutions, but each poses a problem when inserting large amounts of data:

- AWS Management Console – You can manually insert data into a DynamoDB table via the AWS Management Console. You must add each key-value pair by hand. For bulk ingestion, this is a time-consuming process. Therefore, ingesting data via the console is not a viable option.
- AWS CLI – Another option is using the AWS CLI to load data into a DynamoDB table. However, this process requires that your data is in JSON format. Although JSON data is represented as key-value pairs and is therefore ideal for non-relational data, CSV files are more commonly used for data exchange. Therefore, if you receive bulk data in CSV format, you cannot easily use the AWS CLI for insertion into DynamoDB.
- AWS Data Pipeline – You can import data from Amazon S3 into DynamoDB using AWS Data Pipeline.

The file comes up and the date field in the CSV is row 14:

3) In the Expression window, add this code:

5) Get error above.

It may be helpful to give you an idea of the table I'm importing into:

`key` int(11) unsigned NOT NULL AUTO_INCREMENT,
`Net Units Sold or KU/KOLL Units**` int(11) DEFAULT NULL,
`Royalty Type` varchar(255) DEFAULT NULL,
`Transaction Type*` varchar(255) DEFAULT NULL,
`List Price without VAT` decimal(19,2) DEFAULT NULL,
`Average File Size` float(5,2) DEFAULT NULL,
`Offer Price without VAT` varchar(255) DEFAULT NULL,
`Average Delivery Cost` varchar(255) DEFAULT NULL,
) ENGINE=InnoDB AUTO_INCREMENT=11 DEFAULT CHARSET=utf8

In the error message, Amazon and USD are values for the fields that follow date (country and currency) in each row.

Load bulk data into Sequel Pro manual

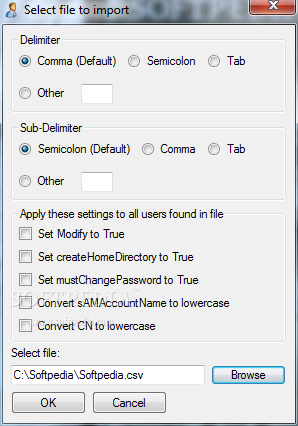

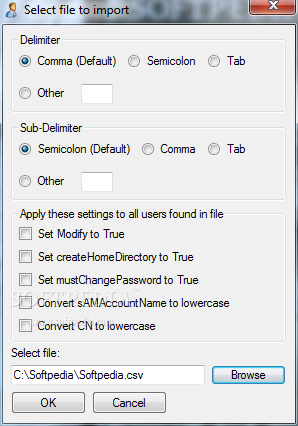

I'm trying to import a file into a MySQL table using Sequel Pro. I know I need to use STR_TO_DATE, but I can't figure out the right syntax. I'm getting a bunch of these errors for each row:

You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'SET date = '%m/%d/%Y') ,'Amazon','USD')' at line 2

1) File > Import.
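One way to sidestep the STR_TO_DATE syntax question is to rewrite the date column before importing, so that MySQL receives dates it already accepts as `YYYY-MM-DD`. The sketch below is a workaround under stated assumptions, not the Sequel Pro expression the question asks for: the function name `convert_date_column` is hypothetical, and the input dates are assumed to follow the `%m/%d/%Y` pattern shown in the error message.

```python
import csv
import io
from datetime import datetime


def convert_date_column(csv_text, column_index, in_format="%m/%d/%Y"):
    """Return CSV text with one column rewritten as YYYY-MM-DD."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for row in csv.reader(io.StringIO(csv_text)):
        try:
            parsed = datetime.strptime(row[column_index], in_format)
            row[column_index] = parsed.strftime("%Y-%m-%d")
        except (ValueError, IndexError):
            # Header rows, short rows, and unparseable values stay unchanged.
            pass
        writer.writerow(row)
    return out.getvalue()
```

The index is zero-based, so if the date is the 14th field in each row, `column_index` would be 13. The converted file can then be imported without a date expression.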
